OLED TV technology has captured the imagination of video enthusiasts over the past few years. Just like plasma display panel (PDP) technology, OLED is self-emissive, meaning that every pixel can be turned on and off individually, contributing to the highly desirable picture quality attributes of absolute blacks and infinite contrast. At the CES 2017 consumer electronics trade show that’s going to take place in Las Vegas next month, more TV brands will likely join LG Electronics in unveiling their shiny new OLED televisions (with panels sourced from LG Display, the lone vendor of commercially viable TV-sized OLED screens).

As outstanding as OLED technology is, a few challenges lie ahead, especially as the film and broadcast industry marches relentlessly towards HDR (high dynamic range) delivery. We’ve reviewed and calibrated almost every OLED television on the market throughout 2016, and while OLED reigns supreme in displaying SDR (standard dynamic range) material thanks to true blacks, vibrant colours and wide viewing angles (almost no loss of contrast and saturation off-axis), there have been some kinks with HDR.

Peak Brightness

Like it or not, peak brightness is central to the entire HDR experience. Both the SMPTE ST.2084 PQ (perceptual quantiser) EOTF (electro-optical transfer function) that underpins the HDR10 standard and Dolby’s proprietary Dolby Vision format go all the way up to 10,000 nits of peak luminance, although in the real world, Ultra HD Blu-rays are mastered to either 1,000 nits (on Sony BVM-X300 reference broadcast OLED monitors) or 4,000 nits (on Dolby Pulsar monitors).
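To make the 10,000-nit ceiling more concrete, here is a minimal Python sketch of the ST.2084 PQ EOTF and its inverse, using the constants published in the standard. The function names are our own, and the sketch works on normalised signal values (0 to 1), ignoring bit depth and legal-range scaling.

```python
# SMPTE ST.2084 (PQ) constants, written as the exact ratios given in the standard.
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0        # PQ is an absolute scale that tops out at 10,000 nits


def pq_eotf(signal: float) -> float:
    """Map a normalised PQ signal value (0..1) to display luminance in nits."""
    signal = min(max(signal, 0.0), 1.0)
    e = signal ** (1 / M2)
    return PEAK_NITS * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Map luminance in nits (0..10000) back to a normalised PQ signal value."""
    y = (min(max(nits, 0.0), PEAK_NITS) / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


if __name__ == "__main__":
    for nits in (100, 1000, 4000, 10000):
        print(f"{nits:>6} nits -> PQ signal {pq_inverse_eotf(nits):.4f}")
```

On this curve 100 nits sits at roughly 0.51 of the signal range, 1,000 nits at roughly 0.75 and 4,000 nits at roughly 0.90, so everything a 1,000-nit master adds above a 1,000-nit display’s capability is packed into the top quarter of the code values.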

Once calibrated to D65 (yes, HDR shares the same white point as SDR), none of the 2016 OLEDs we’ve tested in our lab or calibrated for owners in the wild could exceed 750 nits of peak brightness when measured on a 10% window (the smallest window size stipulated by the UHD Alliance for Ultra HD Premium certification). Due to ABL (Automatic Brightness Limiter) circuitry, most 2016 OLED TVs can only deliver a light output of around 120 nits when asked to display a full-field white raster, although we’ll be the first to admit that such a high APL (Average Picture Level) is extremely rare in real-life material.

This is the point where some misguided OLED owners will argue that “I don’t need more brightness; 300/400/500 nits already nearly blinded me” or “1000 nits? I don’t want to wear sunglasses when I’m watching TV!”. To clarify, this extra peak brightness capacity is applied not to the whole picture, but only to selected parts of the HDR image – specifically specular highlights – so that reflections off a shiny surface look more realistic, creating a greater sense of depth and insight. The higher the peak brightness, the more highlight detail can be resolved and reproduced with clarity, and the more accurate the displayed image is relative to the original creative intent.

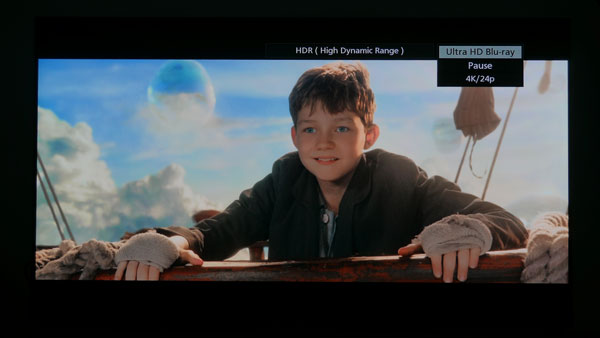

Without proper tone-mapping (we’ll explain this later), bright detail whose luminance value exceeds the native peak brightness of the HDR TV will simply be discarded and not displayed (technically this is known as “clipping”). Here’s an example from the 4K Blu-ray of Pan (which is mastered to 4000 nits) as presented on a 650-nit display with minimal tone-mapping:

Notice how the details in the clouds on the right of the screen, not to mention the outline of the bubble on the left, were blown out, since the display was unable to resolve these highlight details. Here’s how the image should look with more accurate tone-mapping:

As you can see, if tone-mapping is taken out of the equation, an ST.2084-compatible display’s peak brightness directly affects how much bright detail is present in the picture, rather than merely how intense that detail is. If you’ve seen and disliked crushed shadow detail on a television, bear in mind that clipped highlight detail is the same problem at the other end of the contrast range.
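The clipping behaviour described above can be sketched in a few lines of Python. This is a toy model rather than any TV’s actual processing: it assumes the display simply discards everything above its native peak, and the highlight levels are hypothetical values chosen for illustration.

```python
def hard_clip(nits: float, display_peak: float) -> float:
    """No tone-mapping: anything brighter than the panel can show is clamped to its peak."""
    return min(nits, display_peak)


def distinct_levels(highlights, display_peak):
    """Count how many source highlight levels remain distinguishable after clipping."""
    return len({hard_clip(n, display_peak) for n in highlights})


# Hypothetical highlight levels (nits) in a scene mastered to 4000 nits.
HIGHLIGHTS = [500, 700, 1000, 2000, 4000]

if __name__ == "__main__":
    for peak in (650, 1800):
        shown = [hard_clip(n, peak) for n in HIGHLIGHTS]
        print(peak, shown, distinct_levels(HIGHLIGHTS, peak))
```

On a 650-nit display the four brightest levels collapse into one, leaving only two distinguishable levels; on an 1800-nit display four survive. That is the sense in which peak brightness governs how much highlight detail is present, not merely how bright it is.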

Tone-Mapping

We’ve used the term tone-mapping several times in the preceding paragraphs, so we’d better explain what it is. In HDR parlance, tone-mapping is used generically to describe the process of adjusting the tonal range of HDR content to fit a TV whose peak brightness and colour gamut coverage are lower than those of the mastering display.

Since there’s currently no standard for tone-mapping, each TV manufacturer is free to use its own approach. With static HDR metadata (as implemented on Ultra HD Blu-rays as of Dec 2016), as a general rule of thumb a choice has to be made between the brightness of the picture (i.e. Average Picture Level or APL) and the amount of highlight detail retained. Assuming an HDR TV’s peak brightness is lower than that of the mastering monitor, attempting to display more highlight detail will decrease the APL and make the overall image dimmer.
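One way to picture this static-metadata trade-off is a “knee” curve: content below a chosen knee point passes through untouched, and everything between the knee and the mastering peak is squeezed into the remaining headroom. The sketch below is a deliberately crude, hypothetical curve (linear compression in nits, whereas real TVs use their own smoother, manufacturer-specific roll-offs), with the mastering peak assumed to come from static metadata.

```python
def tone_map_knee(nits: float, mastering_peak: float = 4000.0,
                  display_peak: float = 650.0, knee: float = 400.0) -> float:
    """Hypothetical knee-style tone curve driven by a single static mastering peak."""
    if mastering_peak <= display_peak:
        # The panel can show the whole mastered range, so no compression is needed.
        return min(nits, display_peak)
    if not 0.0 <= knee < display_peak:
        raise ValueError("knee must lie below the display peak")
    if nits <= knee:
        return nits  # shadows and mid-tones pass through unchanged
    # Linearly squeeze [knee, mastering_peak] into [knee, display_peak].
    t = (min(nits, mastering_peak) - knee) / (mastering_peak - knee)
    return knee + t * (display_peak - knee)


if __name__ == "__main__":
    for knee in (400.0, 200.0):
        mid = tone_map_knee(500, knee=knee)
        spread = tone_map_knee(4000, knee=knee) - tone_map_knee(1000, knee=knee)
        print(f"knee={knee:.0f}: 500-nit object -> {mid:.0f} nits, "
              f"1000-vs-4000 highlight separation -> {spread:.0f} nits")
```

Lowering the knee from 400 to 200 nits leaves noticeably more separation between the 1,000-nit and 4,000-nit highlights, but a 500-nit object drops from about 407 to about 236 nits: more highlight detail is bought with a dimmer picture.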

Dynamic metadata with scene-by-scene optimisation should prove more effective in maintaining the creative intent, but here’s the bombshell: the higher a display’s peak brightness, the less tone-mapping it has to do. We’ve analysed many different tone-mapping implementations on the televisions we reviewed throughout 2016, and they invariably introduced some issues, ranging from an overly dark or bright picture to visible posterisation at certain luminance levels.
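The interplay between dynamic metadata and display peak brightness can be illustrated with simple counting. The sketch assumes, as a simplification, that a TV using static metadata tone-maps every scene against the disc-wide mastering peak, while dynamic metadata lets it judge each scene by its own brightest pixel. The helper name is hypothetical and the per-scene values are invented for illustration, not measured from any disc.

```python
# Hypothetical brightest-pixel values (nits) for six scenes of a 4000-nit master.
SCENE_MAX_NITS = [120, 450, 600, 900, 1500, 3800]
STATIC_PEAK_NITS = 4000.0  # single disc-wide value carried in static metadata


def scenes_needing_tone_mapping(scene_maxima, display_peak, static_peak=None):
    """Hypothetical helper: count scenes whose reference peak exceeds the panel's peak.

    With static_peak set, every scene is judged against that one disc-wide value
    (static metadata). With static_peak=None, each scene is judged against its own
    maximum (dynamic, scene-by-scene metadata).
    """
    count = 0
    for scene_max in scene_maxima:
        reference = static_peak if static_peak is not None else scene_max
        if reference > display_peak:
            count += 1
    return count


if __name__ == "__main__":
    total = len(SCENE_MAX_NITS)
    for peak in (650.0, 1800.0):
        static = scenes_needing_tone_mapping(SCENE_MAX_NITS, peak, STATIC_PEAK_NITS)
        dynamic = scenes_needing_tone_mapping(SCENE_MAX_NITS, peak)
        print(f"{peak:.0f}-nit display: static {static}/{total}, dynamic {dynamic}/{total}")
```

Under these assumptions, static metadata sends all six scenes through the compressive curve on both displays, whereas with dynamic metadata the 650-nit display still has to tone-map three scenes and the 1800-nit display only one.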

Colour Volume

Besides determining the amount of highlight detail displayed from source to screen sans tone-mapping, peak brightness also has a significant impact on colour reproduction, more so in HDR than in SDR.

“But wait,” we hear you ask. “All UHD Premium-certified TVs should cover at least 90% of the DCI-P3 colour space anyway. Why should their colours be affected by peak brightness in any noticeable manner?”

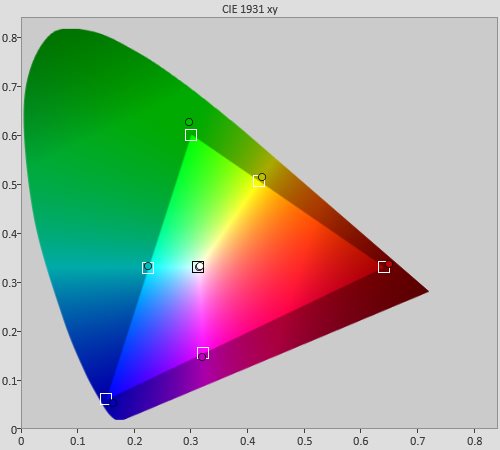

And therein lies the problem. For the longest time, colour performance in consumer displays has been represented using CIE chromaticity diagram which is basically a 2D chart, with the 1931 xy version (adopted by many technical publications including ourselves) generally used to depict hue and saturation but not the third – and perhaps most important – parameter of colour brightness.

|

| A typical CIE 1931 xy chromaticity diagram |

In other words, the colour palette in imaging systems is actually three-dimensional (hence “colour volume”), but we’ve put up with 2D chromaticity diagrams for as long as we have precisely because of the nominal 100-nit peak white of SDR mastering – taking measurements at a single level of 75% intensity (75 nits) was enough to give a reasonably good account of the colour capabilities of SDR displays.

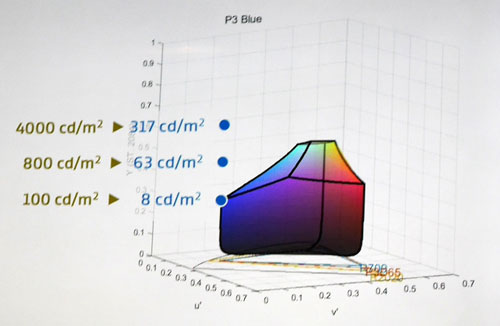

With HDR demanding peak brightness of 1000 or even 4000 nits, plotting 2D colour gamuts – essentially capturing only one horizontal snapshot slice from a vertical cylinder of colour volume – is clearly no longer sufficient. Consider a DCI-P3 blue pixel: on a 100-nit display, the brightest the pixel would get is 8 nits; whereas on a 4000-nit display it would be a very bright yet still fully saturated 317 nits! The blue pixel would look very different on a 100-nit display versus a 4000-nit display, even though it would occupy the same coordinates on a 2D CIE chart.

|

| Colour volume chart showing P3 blue primary at different nit levels (Credit: Dolby) |
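The 8-nit and 317-nit figures can be reproduced from the P3 primaries themselves. The Python sketch below derives each primary’s share of white luminance from its CIE 1931 xy coordinates (the usual RGB-to-XYZ derivation), assuming P3 primaries with a D65 white point and an idealised RGB-only display whose white is the sum of its three primaries at full drive. It then checks that the blue primary keeps exactly the same xy coordinates at either luminance, which is why a 2D chart cannot tell the two apart.

```python
# CIE 1931 xy chromaticities: P3 primaries with a D65 white point (assumed).
P3_PRIMARIES = {"R": (0.680, 0.320), "G": (0.265, 0.690), "B": (0.150, 0.060)}
D65_WHITE = (0.3127, 0.3290)


def xy_to_XYZ(x, y):
    """XYZ tristimulus values of a chromaticity, normalised so that Y = 1."""
    return (x / y, 1.0, (1.0 - x - y) / y)


def XYZ_to_xy(X, Y, Z):
    """Project XYZ onto the 2D chromaticity plane; luminance is discarded."""
    total = X + Y + Z
    return (X / total, Y / total)


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a . s = b with Cramer's rule."""
    d = det3(a)
    result = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        result.append(det3(m) / d)
    return result


def luminance_weights(primaries, white):
    """Fraction of white luminance contributed by each primary (Y row of RGB->XYZ)."""
    cols = [xy_to_XYZ(*primaries[k]) for k in ("R", "G", "B")]
    a = [[cols[c][r] for c in range(3)] for r in range(3)]  # rows X, Y, Z; columns R, G, B
    # The scale factors s make s_R*R + s_G*G + s_B*B equal the white point (Y = 1);
    # since every column has Y = 1, s is also each primary's share of white luminance.
    return solve3(a, list(xy_to_XYZ(*white)))


W_R, W_G, W_B = luminance_weights(P3_PRIMARIES, D65_WHITE)


def full_blue_XYZ(display_peak_nits):
    """XYZ (in nits) of a fully driven P3 blue pixel on an RGB display of the given white peak."""
    X, Y, Z = xy_to_XYZ(*P3_PRIMARIES["B"])
    scale = W_B * display_peak_nits
    return (X * scale, Y * scale, Z * scale)


if __name__ == "__main__":
    for peak in (100, 4000):
        X, Y, Z = full_blue_XYZ(peak)
        x, y = XYZ_to_xy(X, Y, Z)
        print(f"{peak}-nit display: blue = {Y:.1f} nits at xy = ({x:.3f}, {y:.3f})")
```

The derived blue share comes out at about 0.079 of white, so full blue lands at roughly 7.9 nits on the 100-nit display and roughly 317 nits on the 4000-nit one, at identical xy coordinates.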

Assuming the same 2D colour gamut coverage, it follows that the higher the peak brightness, the greater the colour volume, and the more colours are available in the palette for faithful reproduction of the creative intent. As an example, when we reviewed the Sony ZD9, which was capable of delivering 1800 nits of peak luminance, we ran a side-by-side comparison against a 2016 LG OLED whose peak brightness topped out at 630 nits. In the Times Square fight sequence in The Amazing Spider-Man 2 (a 4K Blu-ray mastered to 4000 nits), the Sony ZD9 managed to paint Electro’s electricity bolts (timecode 00:53:29) in a convincing blue hue, whilst they washed out towards white on the LG OLED.

Can better tone-mapping compensate for the reduced colour volume caused by lower peak brightness? The honest answer is that we don’t know: none of the 2016 OLEDs exhibited flawless tone-mapping (although LG did improve it as the year wore on), and there’s virtually no Dolby Vision content (which promises superior tone-mapping through dynamic metadata) in the UK beyond some USB stick demos at the time of publication, so we’ll have to wait until next year, when more non-LG OLED TVs and Dolby Vision material hit the market, before reaching any definitive conclusion. One thing’s for certain though – rendering colours that are both bright and saturated would be much easier with a high brightness reserve.

Final Words

For ages, videophiles all around the world have been pining for the success of OLED TV: with the promise of true blacks, vibrant colours, wide viewing angles and an ultra-thin form factor, what’s not to like? And once LG Display managed to improve the yields of its WRGB OLED panels to commercially viable levels, OLED looked set to become the future of television.

However, following the arrival and proliferation of HDR this year, things have suddenly become less clear-cut. In our annual TV shootout back in July, the Samsung KS9500 received the most votes from the audience for HDR presentation, followed closely by the Panasonic DX900 and then the LG E6 OLED.

Don’t get us wrong: for watching SDR content, there’s nothing we would prefer over the 2016 lineup of LG OLED TVs (except perhaps Panasonic’s final generation of plasmas for their innate motion clarity). But HDR provides an undeniably superior viewing experience to SDR, and while HDR content remains thin on the ground, it’s growing in quantity and maturing in quality.

A film producer recently told HDTVTest, “You don’t know what HDR is supposed to look like until you’ve watched Chappie on a 4000-nit Dolby Pulsar monitor”. We certainly hope 2017 will bring OLED televisions with higher peak brightness, greater colour volume and better tone-mapping to fulfil the creative intent of HDR movies in a more accurate manner.

@Vincent Teoh:

Great article!

I still think the future of reference-quality HDR is OLED, and here’s why:

UNIFORMITY

From what I can gather, the non-uniform TFT manufacturing that produces near-black non-uniformity in consumer OLEDs has been completely solved on the BVM-X300 through the use of big, expensive LUT compensation processing chips and probably better manufacturing tolerances. I’d bet that mass-production manufacturing tolerances and yields will continue to improve, and that advanced image compensation processing chips could be integrated at consumer price points a few years down the line.

COLOUR ACCURACY

From what I can gather, the X300 can accurately render 100% of D65 P3 throughout the entire luminance range using colour filters. With good processing, I’d bet it will be four years at most before this level of colour accuracy is available in high-end consumer products. Nanosys have shown interest in using blue OLEDs with QD filters for the red and green subpixels to achieve WCG, so I imagine other QD film makers will all be competing to bring the price of colour accuracy down.

COLOUR VOLUME

From what I can gather, LG’s OLED panels render washed-out colours at the high end of the luminance range. I’m betting this is due to LG’s WRGB layout, where the white subpixel is used to improve peak white brightness. If this conspiracy theory is true, the strategy would allow WRGB OLED panels to be marketed with relatively high peak brightness specifications. These specs would not be directly comparable to their LCD counterparts, which achieve their peak white figures through RGB subpixels alone. In other words, I’m suspicious that a 10% white window of 630 nits on a WRGB panel translates to a 10% fully saturated blue window of 50 nits. And it’s these 80-nit and higher blue measurements that are vital to all that lovely saturated Electro bolt detail.

OLED TV sales are estimated to grow, with Panasonic, Sony and others getting OLEDs onto 2017 shelves for a piece of the pie. I’d bet that by 2018 some of them will team up to manufacture their own Samsung-style RGB-layout panels with 80-nit or higher blues, for sale within five years from now. OLEDs may never match the brightness, and thus the colour volume, of LCDs, but self-emissive RGB colour saturation and high-contrast detail retention will make up for it.

GRADATION

Black crush and dither have been plaguing OLED TVs, but from what Panasonic has been saying about its 2017 production prototype OLED, precise gradation near black will take a significant step forward. And if we can get close to 10-bit accuracy near black, the rest of the luminance range will be a walk in the park.

BRIGHTNESS, LIFESPAN & MOTION RESOLUTION

Since the first OLED displays, brightness and lifespan have been increasing steadily, and the pace hasn’t slowed. Given the growing market demand, the steady discovery of new OLED materials and continuing manufacturing improvements, I wouldn’t bet on OLED brightness and lifespan increases slowing down anytime soon.

It seems that whenever a high-motion-resolution display technology is revealed, whether it’s SED or Micro-LED, the marketers make a big deal of the motion resolution. I think there is demand, even from the general public, for clearer images. All four major consumer VR HMD manufacturers use low-persistence BFI OLEDs, so even consumers who grew up on sample-and-hold displays are getting a taste of high motion resolution. I’d bet any TV manufacturer who can show off BFI on the shop floor will get attention, especially from the growing market of gamers.

And with high brightness you can use BFI. If enough of us yell on these forums, I imagine there will be OLED TVs soon enough with a BFI mode for 120-nit-peak, SDR-only sources, finally replacing your Panny plasma. But in time, with ever more efficient OLEDs, even HDR sources will get the high-motion-resolution treatment. The X300 has a scrolling-scan BFI mode; I’m not sure if it’s bright enough for HDR, but it’s early days yet. The X300 can even do true pulsating interlaced rendering if you want to avoid jumpy bob deinterlacing or traditional artefact-ridden deinterlacing.

MARKET DOMINANCE

We all knew plasma was king in the late noughties, but the plebs thought the wall-mounted PDPs popularised in the 90s were for grandpa. They wanted the newer, more expensive and thus aspirational LCD, or better yet an “LED” TV of the future. I think the general consumer has got wise to the marketing of LED, 3D and curved displays, and I believe SUHD and Quantum Dots won’t have the same pull. I don’t have numbers, but I often come across game reviewers in the UK and US mentioning how happy they are with their HDR LG OLEDs this year. This suggests to me that, despite current issues, OLED is already drawing more eyeballs than Kuros or VT60s ever did and has become the new aspirational display.

I’d bet that developments in inkjet manufacturing, and R&D costs spread over mobile and wearable product lines, will make OLED prices competitive with mid-tier LCDs within four years. While Samsung has talked about skipping OLED for QLED, the lifespan of the vital blue QLEDs is still well behind that of OLEDs, so QLED is unlikely to take traction away from OLED sales for the foreseeable future.

While current WRGB OLEDs, with their uniformity issues and lack of colour volume, might not be enough to make us picture enthusiasts hand over our cash, it’s the plebs who drive sales, and they just want to say they have an OLED and ooh-aah at the pure blacks. And it’s they who will fund the R&D for a new generation of reference-status displays.

ENTHUSIAST ACCEPTANCE

I’d bet the biggest and last hurdle for plasma replacement is input lag. I don’t know how fast the Sony BVM monitor chips are, but I doubt they have VT60-level input lag for gamers. And given that the major semiconductor manufacturers have said they don’t have the technological means to mass-manufacture faster computer chips for the foreseeable future (clock speed/power density limitations), some very smart engineering might be required to combine uniformity compensation with low lag on self-emissive TFT-based displays.

Another great piece from you guys, but please replace these tiny comparison pics. A few months ago you listened to us and used high-res pics; please keep using high-res pics so we can compare the pictures properly :)

You highlighted OLED problems with HDR but obviously it’s not cheap to keep 1 TV for HDR and 1 for SDR so OLED is still the best choice and HDR can’t be that bad on it :)

An interesting technical article, thank you.

The only problem I have is that your average consumer reading this article would perhaps garner the impression that they are better off with a brighter LCD TV for HDR material due to the limitations you are “highlighting”.

I’m of the opinion this couldn’t be further from the truth.

I own a Panasonic DX902, and the problems an OLED encounters with bright highlights pale in comparison with the struggles my set encounters with darker content.

The backlight is a flickering, halo-ridden mess on any low apl scenes. Panning shots are accompanied by some pseudo-banding that occurs as the LEDs switch on or off whenever something bright is next to dark. At times it’s completely unwatchable.

I’d also be interested to know what the respective colour accuracy is like in real-world HDR content at LOW nits of brightness for LCD vs OLED.

As far as I can tell, my set can’t properly saturate colours in darker areas of mixed content unless the local dimming is maxed out (which results in unacceptably awful dimming artefacts)?

HDR itself is in a state of flux…this article is little more than a mountain of FUD.

Oh burn... Von’s hiber here, reading what I already knew... OLEDs are OVERRATED... perfect black, and only perfect black, is where OLEDs shine. Guess what, movies have millions of shades of black and grey, and unless the film is all space shots, you’re hit with the same problem as LED TVs... only now you have low brightness and IR!!!!! This made my day

Sincerely

Von’s hiber

@morgs: The Sony X300 uses RGB OLED architecture which is a totally different beast from the consumer WRGB variant. Hence the $30,000 price tag.

@Adam M: Good point.

@davejones: The Sony ZD9 and to a lesser extent the Samsung KS9500 have better local dimming control than the Panasonic DX902. Interesting you should mention low-APL colour saturation, because Panasonic has done a lot of work in this area with their internal 3D LUT mapping, at least for SDR.

@Vin: Which part of ITU’s BT.2100 spec which ratified Hybrid-Log Gamma (HLG), and SMPTE’s ST.2084 and ST.2086 documents are in flux? The standards are already in place.

Warmest regards

Vincent

Vincent

Interesting article – one thing I do see in your Pan picture is a load of dark crush. The dark detail has been completely lost in his jacket and the wooden side of the boat he is leaning over. I am guessing it doesn’t look like this in the flesh …

@Vincent: Where does dynamic metadata HDR fit into all those acronyms? Only Dolby Vision has a working solution now that properly scales to your TV’s capabilities. TV capability (even LCDs) is also in flux, so whatever you buy this year or next will be outshone in the nit race by the following model years (10,000 is the end game based on Dolby’s own lab experiments). Finally, what you seem to conveniently ignore is that the brighter the specular highlights that are required, the more they become fraught with blooming and haloing artefacts as the inferiority of LCD and its backlighting scheme is revealed plainly for all to see (if they’re not squinting by that point).

How much money (sponsorship) did you get from Samsung for writing this article?

You can fool some of the people some of the time, but not all of the people all of the time!

LCD is dead. Face it!

@jjames: Because the dynamic range of my camera was not as high as the TV’s, I had to lower the exposure to fully capture the highlight detail. In real life, there was no dark crush, and the shadow detail was clearly visible.

@Vin: Dynamic metadata hasn’t been standardised yet, but ST.2084 and HLG have. I can only judge by what standard is established at the moment. There will always be another new standard/ technology forthcoming, and many people can’t keep waiting around forever… personally I certainly enjoy a better experience with HDR content with static metadata than any SDR content available on the market at the moment.

Re Dolby Vision having a properly working solution, are you going by theory, other people’s experience, or have you done a proper comparison with real-life material yourself?

Re specular highlights, most of the time they would appear in higher-APL scenes with a non-black background, so there would be no blooming/haloing artefacts.

Re squinting, please re-read the paragraph debunking the misconceptions of “misguided OLED owners”.

@Josh: I haven’t been paid by Samsung to write this article.

And until OLED reaches price parity, or is available in smaller screen sizes (less than 55 inches), LCD will continue to be around.

Warmest regards

Vincent

I always enjoy these detailed articles, but the impression is always left that the Panasonic plasma legacy is hard to break free from until absolute reference perfection reaches the HDTVTest benches. From a consumer’s point of view, aspiration is usually replaced by pragmatic choices like price, overall appearance, size, and whether the picture seen in the store suits the customer’s individual taste. This is not a straightforward process, as most panels in the price ranges that attract buyers look much the same. OLED has to become that something extra to sell in adequate numbers, but it still hasn’t grabbed consumers’ imagination as LG would like. It is still too new and too expensive, and, more importantly, it almost seems designed to add value through compatible products, technology and professional calibration to get the best picture, which is a real negative for average people just looking for a new-generation product to plug and play. The truth is that it is not easy to get full value for your investment with high-end panels; they are not user-friendly if you are looking for something special out of the box. The simpler LEDs will remain best sellers for a while yet, until a functional calibration app comes with the TV to adjust it properly and automatically on first set-up.

@ Mr. Teoh: Yes, but it doesn’t apply to me and I’m not misguided in the least…I am coming from experience with viewing HDR in the home. Those areas of extra brightness like even significantly sized windows cause me to visibly squint. As much as I have been impressed by a few HDR sources, black out room viewing and HDR don’t mix particularly well. I find reference 3D content on a passive OLED to be the most stunning visually speaking.

1000-nit OLEDs are purportedly coming next year, so it makes all this windmill-tilting towards LCD pretty hollow.

And as to your article, the G6 can output at well above 600 nits, hence why it is absolutely blinding in a dark room. It would seem to me the only way to fix that is to calibrate the entire display at a much dimmer baseline or watch content that is properly graded for your panel’s capabilities.

On another note, you mentioned 10% windows but failed to acknowledge that the OLEDs can actually sustain more brightness longer than sets from Samsung and Sony: http://www.rtings.com/tv/tests/picture-quality/peak-brightness